A schema file is a critical part of setting up the Ingestion API connector. It describes the structure of the data you intend to stream to Data Cloud. In this article, we’ll look at an example schema and break down its important components so that you can start building your own custom schemas.

Before we think about the schema file, we should make sure that we have thoroughly planned all the events we want to capture and the fields we want to associate with each of them. As an example, let’s use an e-commerce application that streams data whenever customers add an item to their cart and whenever they check out. Here are the fields we’re looking to ingest:

Add To Cart

| Field Name | Data Type |
|---|---|
| Timestamp | Datetime |
| User Id (PK) | String |
| Email | String |
| Item Price | Number |
| Item URL | String |

Checkout

| Field Name | Data Type |
|---|---|
| Timestamp | Datetime |
| User Id (PK) | String |
| Email | String |
| Total Price | Number |
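Before writing any YAML, it can help to write one sample event per stream and confirm it carries every planned field. The sketch below is a minimal Python illustration under our own assumptions: the record values and the `PLANNED_FIELDS` and `missing_fields` names are hypothetical, and the keys use the snake_case field names from the sample schema later in this article.

```python
from datetime import datetime, timezone

# Hypothetical planned fields per event, taken from the two tables above
# (keys use the snake_case names from the sample schema).
PLANNED_FIELDS = {
    "cart_add": {"timestamp", "user_id", "email", "item_price", "item_url"},
    "checkout": {"timestamp", "user_id", "email", "total_price"},
}

# Hypothetical sample events; every value here is made up for illustration.
cart_add_event = {
    "timestamp": datetime(2024, 5, 1, 14, 30, tzinfo=timezone.utc).isoformat(),
    "user_id": "u-1001",
    "email": "jane@example.com",
    "item_price": 49.99,
    "item_url": "https://shop.example.com/items/42",
}
checkout_event = {
    "timestamp": datetime(2024, 5, 1, 14, 45, tzinfo=timezone.utc).isoformat(),
    "user_id": "u-1001",
    "email": "jane@example.com",
    "total_price": 49.99,
}


def missing_fields(event_name, record):
    """Return the planned fields a record lacks, sorted for stable output."""
    return sorted(PLANNED_FIELDS[event_name] - set(record))


print(missing_fields("cart_add", cart_add_event))  # []
print(missing_fields("checkout", {"user_id": "u-1001"}))  # ['email', 'timestamp', 'total_price']
```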

Now that we know what data is coming in, it’s time to prepare the schema file. If you check out the requirements for the schema file, one of the first things you’ll see is that it must be a YAML file with valid OpenAPI formatting.

In simple terms, YAML is a way to represent structured information, similar to JSON or XML. While it’s mostly used for configuration files, here we use it to describe the nitty-gritty of our data model. Instead of getting lost in the details of what exactly the OpenAPI format specifies or which properties a YAML file supports, let’s look at an example schema and break it down into its core components.

We’ll skip over some parts of the YAML structure and some components of the OpenAPI spec, but we’ll make sure to cover everything that’s relevant to creating a schema for your own connector. Here’s a sample schema that can be used to stream customer engagement events for our use case:

```yaml
openapi: 3.0.3
components:
  schemas:
    cart_add:
      type: object
      properties:
        timestamp:
          type: string
          format: date-time
        user_id:
          type: string
        email:
          type: string
          format: email
        item_price:
          type: number
        item_url:
          type: string
          format: url
    checkout:
      type: object
      properties:
        timestamp:
          type: string
          format: date-time
        user_id:
          type: string
        email:
          type: string
          format: email
        total_price:
          type: number
```

General YAML structure and the schemas property

Although the schema above is small and well suited for demonstration purposes, we’ll make it even easier to digest by looking at it from the top down. Let’s first focus on the structure of the outermost properties.

```yaml
openapi: 3.0.3
components:
  schemas:
    cart_add:
      # ...
    checkout:
      # ...
```

In a YAML file, objects and their properties are related through indentation. For example, in the partial structure above, two keys are nested inside the `schemas` property. This tells us that there are two Data Stream objects in our schema, `cart_add` and `checkout`, and any keys nested inside them in turn describe those Data Streams.
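To see how indentation becomes nesting, here is a minimal Python sketch of the data a YAML parser (for example, PyYAML’s `yaml.safe_load`) would produce from the outer structure above. The dict is written by hand so the example needs only the standard library, the field definitions are trimmed, and `data_stream_names` is a hypothetical helper.

```python
# Hand-written equivalent of the parsed YAML: each indentation level
# becomes one level of dict nesting.
schema = {
    "openapi": "3.0.3",
    "components": {
        "schemas": {
            "cart_add": {
                "type": "object",
                "properties": {"item_price": {"type": "number"}},
            },
            "checkout": {
                "type": "object",
                "properties": {"total_price": {"type": "number"}},
            },
        }
    },
}


def data_stream_names(parsed_schema):
    """Every key directly under components.schemas names one Data Stream."""
    return list(parsed_schema["components"]["schemas"])


print(data_stream_names(schema))  # ['cart_add', 'checkout']
```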

Connectors connect Data Cloud to a data source, and Data Streams bring specific objects from that source into Data Cloud as Data Stream Objects (DSOs). A single connector can have multiple streams ingesting multiple entities.

Data Stream Objects

```yaml
# ...
  schemas:
    # ...
    checkout:
      type: object
      properties:
        timestamp:
          # ...
        user_id:
          # ...
        email:
          # ...
        total_price:
          # ...
```

Every Data Stream object within `schemas` has its `type` and `properties` defined. The type is always `object`, and `properties` contains all the fields that make up the data model for that specific Data Stream. In the schema file above, the data model for the `checkout` stream is made up of four fields: `timestamp`, `user_id`, `email`, and `total_price`. Now that we have the stream and its fields defined, we need to specify what type of data each of those fields holds.

The `type` property is not strictly required for the Data Cloud schema, but it’s good to include because it is part of the OpenAPI specification.
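Building on the same parsed-dict view, the sketch below walks every stream, treats a missing `type` as `object` (since Data Cloud doesn’t strictly require it), and lists each stream’s fields in declaration order. The dict is hand-written with field definitions left empty for brevity, and `stream_fields` is a hypothetical helper, not part of any Salesforce tool.

```python
# Parsed form of the sample schema with field definitions left empty.
# cart_add deliberately omits `type` to show that it is tolerated.
schema = {
    "openapi": "3.0.3",
    "components": {
        "schemas": {
            "cart_add": {
                "properties": {
                    "timestamp": {}, "user_id": {}, "email": {},
                    "item_price": {}, "item_url": {},
                },
            },
            "checkout": {
                "type": "object",
                "properties": {
                    "timestamp": {}, "user_id": {}, "email": {}, "total_price": {},
                },
            },
        }
    },
}


def stream_fields(parsed_schema):
    """Map each Data Stream name to its field names, in declaration order."""
    fields = {}
    for name, stream in parsed_schema["components"]["schemas"].items():
        # `type` is optional here; if present it should be "object".
        declared_type = stream.get("type", "object")
        if declared_type != "object":
            raise ValueError(f"{name}: expected type 'object', got {declared_type!r}")
        fields[name] = list(stream.get("properties", {}))
    return fields


print(stream_fields(schema)["checkout"])  # ['timestamp', 'user_id', 'email', 'total_price']
```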

Data Types

```yaml
# ...
    checkout:
      # ...
      properties:
        timestamp:
          type: string
          format: date-time
        user_id:
          type: string
        email:
          type: string
          format: email
        total_price:
          type: number
```

Fields for a Data Stream must have their types defined, and these types must match the data that’s ingested via the connector. At the time of this writing, only three values are supported for `type`: `string`, `number`, and `boolean`.
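Because only `string`, `number`, and `boolean` are accepted at the time of writing, a quick local check can catch other OpenAPI types such as `integer` before you upload. This is a minimal sketch over a hand-written parsed schema; `SUPPORTED_TYPES`, `unsupported_types`, and the `quantity` field are hypothetical.

```python
# Types this article lists as supported at the time of writing.
SUPPORTED_TYPES = {"string", "number", "boolean"}

# Hypothetical parsed schema with one mistake: `quantity` uses "integer",
# which is valid OpenAPI but not in the supported list above.
schema = {
    "openapi": "3.0.3",
    "components": {
        "schemas": {
            "checkout": {
                "type": "object",
                "properties": {
                    "timestamp": {"type": "string", "format": "date-time"},
                    "total_price": {"type": "number"},
                    "quantity": {"type": "integer"},
                },
            }
        }
    },
}


def unsupported_types(parsed_schema):
    """Return one message per field whose type is missing or unsupported."""
    problems = []
    for stream_name, stream in parsed_schema["components"]["schemas"].items():
        for field_name, field in stream.get("properties", {}).items():
            field_type = field.get("type")
            if field_type not in SUPPORTED_TYPES:
                problems.append(f"{stream_name}.{field_name}: unsupported type {field_type!r}")
    return problems


print(unsupported_types(schema))  # ["checkout.quantity: unsupported type 'integer'"]
```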

The `string` type also supports different formats, so it can represent timestamps, email addresses, phone numbers, percentages, and URLs. For example, the `timestamp` field in the `checkout` schema above represents the time when an event occurred; a field like this is typically a `string` with the `date-time` format.
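The sketch below checks a single incoming value against its field definition, including the `date-time`, `email`, and `url` formats used in the sample schema. It is an approximation under our own assumptions: the email pattern is deliberately loose, other string formats (such as those for phone numbers or percentages) are accepted without checking, and Data Cloud’s actual validation may differ. `value_matches` is a hypothetical helper.

```python
import re
from datetime import datetime
from urllib.parse import urlparse

# Deliberately loose email pattern: something@something.something
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def _valid_datetime(value):
    # fromisoformat only accepts a trailing "Z" from Python 3.11, so normalize it.
    try:
        datetime.fromisoformat(value.replace("Z", "+00:00"))
        return True
    except ValueError:
        return False


def _valid_url(value):
    parts = urlparse(value)
    return bool(parts.scheme and parts.netloc)


FORMAT_CHECKS = {
    "date-time": _valid_datetime,
    "email": lambda value: bool(EMAIL_RE.match(value)),
    "url": _valid_url,
}


def value_matches(field_def, value):
    """Approximate check that a value fits a field's type (and string format)."""
    field_type = field_def.get("type")
    if field_type == "string":
        if not isinstance(value, str):
            return False
        check = FORMAT_CHECKS.get(field_def.get("format"))
        return check(value) if check else True
    if field_type == "number":
        # bool is a subclass of int in Python, so exclude it explicitly.
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    if field_type == "boolean":
        return isinstance(value, bool)
    return False
```

For example, `value_matches({"type": "string", "format": "date-time"}, "2024-05-01T14:30:00Z")` is true, while the same check on `"yesterday"` is false.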

Engagement-type streams require a timestamp field with the `date-time` format.
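A local check for this rule might look like the sketch below. The sample schema above doesn’t record which streams are Engagement streams, so in this sketch that list is passed in separately as an assumption; `has_datetime_field` and `engagement_problems` are hypothetical helpers.

```python
def has_datetime_field(stream):
    """True if any field in the stream is a string with the date-time format."""
    return any(
        field.get("type") == "string" and field.get("format") == "date-time"
        for field in stream.get("properties", {}).values()
    )


def engagement_problems(parsed_schema, engagement_streams):
    """Return the Engagement streams that lack a date-time field."""
    schemas = parsed_schema["components"]["schemas"]
    return [name for name in engagement_streams if not has_datetime_field(schemas[name])]


# Hypothetical parsed schema: cart_add forgot the date-time format.
schema = {
    "components": {
        "schemas": {
            "cart_add": {"properties": {"timestamp": {"type": "string"}}},
            "checkout": {
                "properties": {"timestamp": {"type": "string", "format": "date-time"}}
            },
        }
    }
}

print(engagement_problems(schema, ["cart_add", "checkout"]))  # ['cart_add']
```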

Debugging Schemas

Now you’re ready to start creating your own schema files. If you have trouble getting the format exactly right and can’t pinpoint where the error is, here’s a useful tool to help you debug. After you paste your schema into the editor, it detects all the objects you have configured correctly and renders them as interactive components.

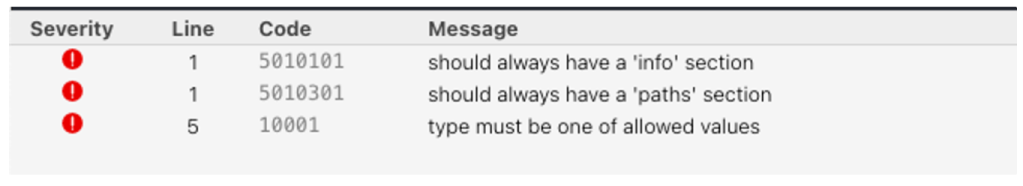

An error panel at the bottom displays any potential misconfigurations in your schema. You can safely ignore the errors asking for `info` and `paths` sections, since they’re not relevant to our use case, but the other messages in the panel should help you debug your schema.
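If you also want a quick offline sanity check, the sketch below collects structural problems in a parsed schema and, following the advice above, deliberately ignores missing `info` and `paths` sections. `lint_schema` is a hypothetical helper, not a replacement for the editor or for Data Cloud’s own validation. The broken example shows a typical indentation slip: when fields aren’t indented under `properties:`, a YAML parser reads `properties` as null and the fields as its siblings.

```python
def lint_schema(parsed_schema):
    """Collect structural problems; 'info' and 'paths' are intentionally not checked."""
    problems = []
    if not str(parsed_schema.get("openapi", "")).startswith("3."):
        problems.append("openapi: expected a 3.x version such as 3.0.3")
    schemas = (parsed_schema.get("components") or {}).get("schemas")
    if not isinstance(schemas, dict) or not schemas:
        problems.append("components.schemas: missing or empty")
        return problems
    for stream_name, stream in schemas.items():
        properties = (stream or {}).get("properties")
        if not properties:
            problems.append(f"{stream_name}: no properties defined")
            continue
        for field_name, field in properties.items():
            if not isinstance(field, dict) or "type" not in field:
                problems.append(f"{stream_name}.{field_name}: missing type")
    return problems


# Parsed form of a mis-indented stream: `timestamp` sits beside `properties`
# instead of under it, so `properties` parses as None.
broken = {
    "openapi": "3.0.3",
    "components": {
        "schemas": {
            "checkout": {
                "type": "object",
                "properties": None,
                "timestamp": {"type": "string", "format": "date-time"},
            }
        }
    },
}

print(lint_schema(broken))  # ['checkout: no properties defined']
```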

As Data Cloud evolves, there will inevitably be breaking changes that render some of the information in this article obsolete. While we’ll try to keep up with these changes and update the article, please consult the official documentation if something doesn’t work for you.